I was staring at my screen, deep in the 'dip' of designing a workshop for 135 global leaders. You know that moment - when nothing hangs together, everything feels overbuilt, and you have absolutely no idea if it'll work when real people show up.

Then a realisation struck me: my highest-impact AI use case wasn't content creation or data analysis - it was getting AI to be my audience. Hundreds of times over.

At Wavetable, we believe learning should feel like play, experimentation, and good vibes. Most of our work has a game-like quality to it. And for any game to work - whether board game, video game, or sports game - players need to be able to play it.

To ensure that, you playtest. Repeatedly. Rigorously. Relentlessly.

But what if you don't have enough playtesters available?

What if your target audience is scattered across time zones, or you need feedback at 3am when inspiration strikes? You use a GPT.

In the next few minutes, I'll show you exactly how we used custom GPTs to simulate hundreds of workshop participants, eliminate design flaws before they happened, and deliver a flawless experience that required zero mid-workshop rescues.

You’ll discover:

- Our three GPT iterations

- What our Digital Playtesters do

- Our core Test Suite

- Pitfalls to avoid

- How you can apply this in your own projects

This approach has transformed how we design experiences at Wavetable, and it might just do the same for you.

The Evolution of our AI Playtesting

Our approach has evolved through three distinct versions:

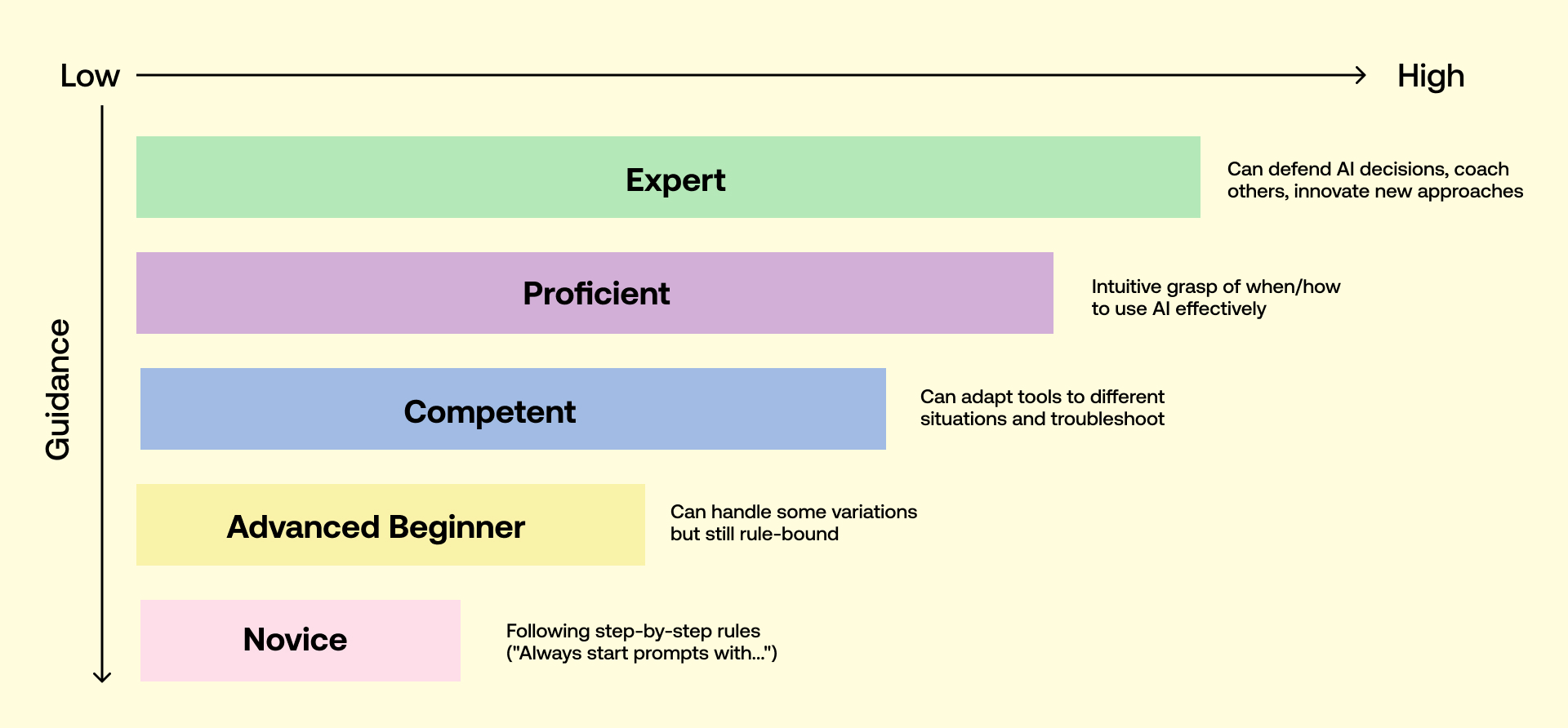

V1: The Borrower

We fed simple user personas to our existing Wavetable Experience Design GPT, which is equipped with our Learning Design best practices and previous project files. For each game we test, we create a project, give the GPT the specific project files, and use it for testing. Simple but effective.

V2: The Specialist

We’re now in the early stages of using a custom GPT created specifically for playtesting - more focused, more insightful.

V3: The Bespoke

Next are GPTs specifically for each project and audience - tailored perfectly to the task at hand.

What our digital players do

In each of our versions, we get the GPT to spin up:

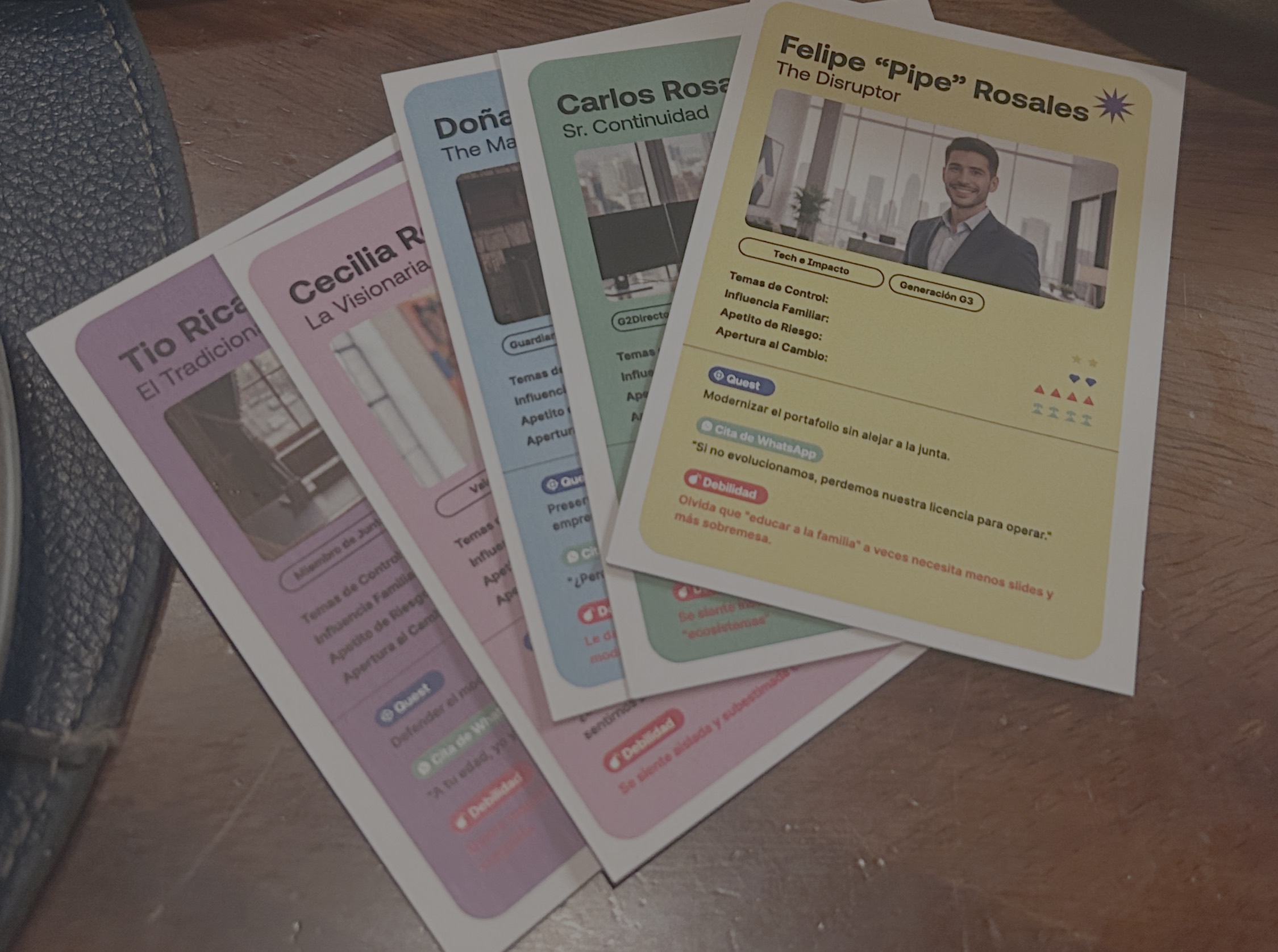

- Personas that match our target audience (there's a whole methodology to how we develop these, which I'll share in an upcoming post)

- Variations on these personas (different backgrounds, motivations, technical abilities)

- Groups containing these variations (the introverts, the skeptics, the enthusiasts - I’ve also started playing with incorporating Enneagram types into this)

- Different sized groups (pairs, trios, quintets, etc.) to test dynamics

- Groups with specific challenges (someone with internet issues, someone joining late)

From this, we have a significant set of use cases we can apply to the game design.
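To make the combinatorics concrete, here is a minimal sketch of how a handful of persona variations turns into a set of test groups. It is an illustration only, not our actual tooling: the `Persona` fields, the `build_groups` helper, and the sample personas are all hypothetical names invented for this example.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Persona:
    """A hypothetical persona record; the fields are illustrative assumptions."""
    role: str
    disposition: str      # e.g. introvert, skeptic, enthusiast
    challenge: str = ""   # e.g. "joins late"; empty string means no challenge


def build_groups(personas, sizes=(2, 3, 5)):
    """Return every possible group of each requested size (pairs, trios, quintets)."""
    groups = []
    for size in sizes:
        groups.extend(combinations(personas, size))
    return groups


personas = [
    Persona("account director", "enthusiast"),
    Persona("head of finance", "skeptic"),
    Persona("creative director", "introvert"),
    Persona("account director", "skeptic", challenge="unstable internet"),
    Persona("head of finance", "introvert", challenge="joins late"),
]

groups = build_groups(personas)
print(f"{len(groups)} groups to playtest with")
```

Even five persona variations produce a couple of dozen distinct groups, which is why we let the GPT work through them rather than recruiting humans for every combination.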

Then comes the magic: we get the GPT to playtest what we've built. Both the entire activity (say, a 45-minute scenario) and every stage of it - from the pre-read to the first 5 minutes, to completion.

What we test for

Our test suite includes:

- Time required (Is our 20-minute activity actually taking 35?)

- Conversation elements (Are discussions flowing naturally?)

- Ease of understanding (Are instructions clear enough for first-timers?)

- Language clarity (Especially for non-native English speakers)

- Engagement points (Where do people lean in? Where do they check out?)

- Edge cases (What happens when someone misinterprets a key instruction?)

With minimal setup, we can have the GPT test a single game element dozens of times, with dozens of different persona combinations. It then recommends adjustments to the design that we can think through and implement (or not) - before starting the loop once more.
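One way to picture that loop is as a run plan: every group crossed with every item in the test suite, for a single game element. The sketch below assumes a hypothetical `plan_runs` helper and made-up group labels; it only builds the plan and does not call any AI service.

```python
from itertools import product

# The six checks from the test suite above.
TEST_DIMENSIONS = [
    "time required",
    "conversation elements",
    "ease of understanding",
    "language clarity",
    "engagement points",
    "edge cases",
]


def plan_runs(element, group_labels, dimensions=TEST_DIMENSIONS):
    """Pair every group with every test focus for one game element."""
    return [
        {"element": element, "group": group, "focus": focus}
        for group, focus in product(group_labels, dimensions)
    ]


runs = plan_runs(
    "first 5 minutes",
    ["pair of skeptics", "trio with a late joiner", "mixed quintet"],
)
print(f"{len(runs)} playtest runs planned")
```

Each entry in `runs` becomes one focused request to the GPT, and the recommendations that come back feed the next pass of the design.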

Real impact

This process has helped us spot more than a few blind spots, making the user experience significantly better - especially in new and unknown situations where we're working with large groups of people we don't know.

The activity that may have confused 20% of participants now flows seamlessly. The activity that seemed brilliant in theory but fell flat in practice gets refined before anyone experiences the flop.

Playtesting in Action

That set of 135 leaders? They all worked at a global advertising holding company, and were part of a key professional development program. They represented multiple roles (e.g. account director, head of finance, creative director), across multiple agencies (e.g. media, creative, data), across multiple locations (e.g. UAE, Spain, Denmark). Phew!

We’d never met any of them before, and while we were able to do some stakeholder interviews, the participants weren’t available to playtest directly, nor could they cover the range of use cases we had.

By running our GPT PlayTest Process, we reduced risk, improved the game dynamics, and ended up with zero teams calling out for help, across all 135 participants.

Challenges we're still navigating

Like any tool, our AI playtesters aren't perfect:

- Identity crisis: The GPT occasionally refers to itself and loses track of a persona set

- Memory limitations: Conversations extending beyond 20 exchanges can get muddled - this requires good old (human!) critical thinking

- PDF problems: We need to repeatedly prompt the AI to reference PDFs accurately

- Visual blindness: Stripping visual assets from PDFs helps comprehension, but means manually adding context about design and navigation - which can be fiddly for our team

How to get started

If you're interested in using custom GPTs for playtesting your learning experiences, here are three ways to begin:

1. Start simple: Use ChatGPT to create 3-5 basic personas representing your target audience. Ask it to role-play these personas interacting with a simple activity. Even this basic approach can reveal surprising insights.

2. Create a project file: Document your activity's instructions, goals, and context in a simple text file or PDF. This becomes the foundation that your AI playtester will reference.

3. Run focused tests: Rather than testing everything at once, start by examining specific elements - like instructions, timing, or group dynamics - to get actionable feedback without overwhelm or hallucination.
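If it helps to see the three steps together, here is a rough sketch of a prompt builder that combines personas, your project file text, and a single test focus. The function name `build_playtest_prompt` and the wording of the prompt are assumptions made for this example, not a fixed recipe; paste the output into ChatGPT and adapt it as you go.

```python
def build_playtest_prompt(personas, activity_text, focus):
    """Assemble one focused role-play request from personas and activity text."""
    persona_lines = "\n".join(f"- {p}" for p in personas)
    return (
        "Role-play the following workshop participants as a group:\n"
        f"{persona_lines}\n\n"
        "Here is the activity they have been given:\n"
        f"{activity_text}\n\n"
        f"Play through the activity in character, focusing only on: {focus}.\n"
        "Then step out of character and list what confused or slowed the group."
    )


prompt = build_playtest_prompt(
    personas=[
        "Enthusiastic account director, first time in this format",
        "Skeptical head of finance, joining ten minutes late",
        "Introverted creative director, non-native English speaker",
    ],
    activity_text="In trios, read the scenario card and agree on one decision in 20 minutes.",
    focus="clarity of instructions",
)
print(prompt)
```

Keeping one focus per prompt mirrors step 3: narrow questions tend to produce feedback you can act on.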

Remember, the goal isn't to replace human testing entirely but to iterate more quickly before putting your design in front of real people.

The Road Ahead

The rough edges are there, but the value is undeniable.

We're working on more sophisticated persona libraries, better tracking of conversation flows, and more nuanced feedback mechanisms.

Perhaps the most exciting aspect? This approach democratizes good design. Teams without access to extensive user testing resources can still create experiences that resonate deeply with their audiences.

The future? It's Humans+AI.

Curious about implementing this approach with your learning programs?

We're offering a free 30-minute consultation for leaders looking to reduce risk and improve engagement in their workshops.
